Why is it relevant?

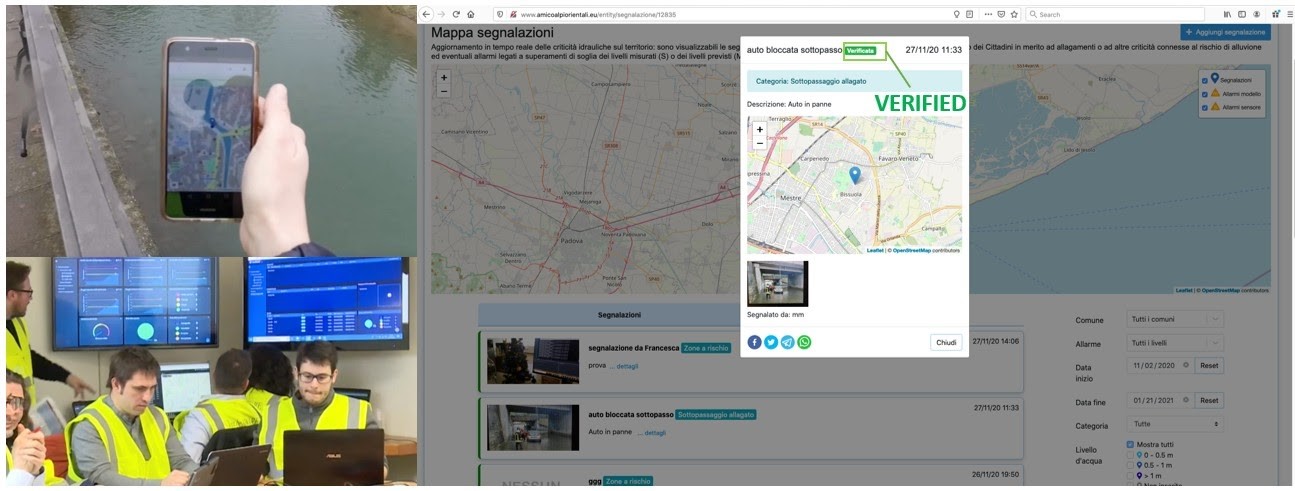

A common concern about Citizen Observatories is uncertainty about the quality of the observations they collect. Many organisations and researchers ask whether citizens can provide data of the same quality as professional scientists. Learning and applying best practices for defining and documenting data quality information helps produce reliable, trustworthy data sets and reduces uncertainty about the collected data.

How can this be done?

Data quality should be ensured according to the standards of the scientific discipline(s) your Citizen Observatory draws on, and which disciplines apply depends on the environmental issue you are focusing on. This requires involving one or more scientists trained in those fields, who can help you design a scientific methodology that makes your data fit for purpose (more on how to engage key stakeholders here).

Useful Resources

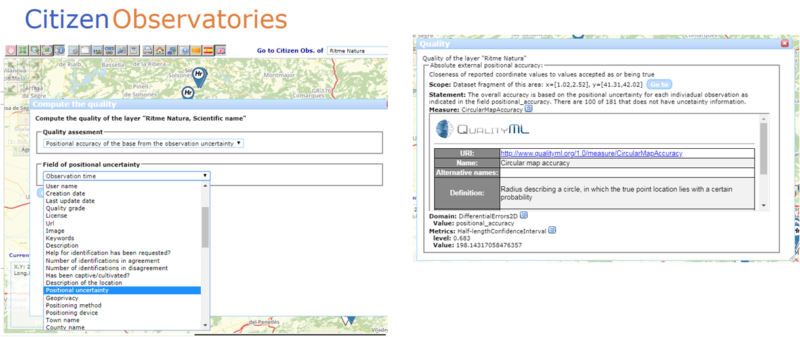

WEBINAR: “Ground Truth Week 2019 – Webinar 3.2 – Data quality and interoperability” describes the capabilities of the quality tool developed in Ground Truth 2.0 and demonstrates how to use it in a real-life scenario.

BOOK CHAPTER: “Chapter 8 Data Quality in Citizen Science” in the book “The Science of Citizen Science” discusses the broad and complex topic of data quality in citizen science and how we can ensure the validity and reliability of data generated by citizen scientists and citizen science projects.

WEBSITE: QualityML is a dictionary based on the ISO 19157 standard that contains hierarchically structured concepts for precisely defining and relating quality levels, from quality classes to quality measurements.

You may also be interested in:

I want to work with data…

…by sharing Citizen Observatory data

…by integrating data from several Citizen Observatories/other sources

I want to generate insights from data and knowledge…

This work by parties of the WeObserve consortium is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.